CVE-2026-20820 is a vulnerability in the Common Log File System (CLFS) driver that was included in the January 2026 Patch Tuesday. The advisory lists it as a heap overflow that can be used for EoP.

CLFS.sys has a rich history of vulnerabilities and exploits, but a lot of them historically have involved modifying or corrupting the BLF files parsed by the driver. I hadn’t looked into CLFS in much detail before, so I thought doing some n-day analysis would be a good framing exercise to gain some familiarity. I also noticed in the docs the mention of Authentication Codes, a relatively recent hardening feature that was added with the intent of mitigating the type of exploits that modify the BLF files. So I was curious as to what other areas of attack surface were still yielding bugs that are capable of being used for EoP.

It was clear from many of the writeups of previous vulns and associated exploits that there’s a fair bit of complexity in CLFS, along with quite a range of undocumented (officially) types - so I was braced for the vulnerability analysis to be a bit of a mission. As it turned out though, the lack of changes in the diff made it quite straightforward…

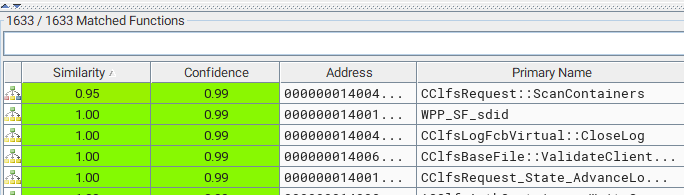

Diffing

Only one changed function. That’s handy.

Vulnerability Root Cause

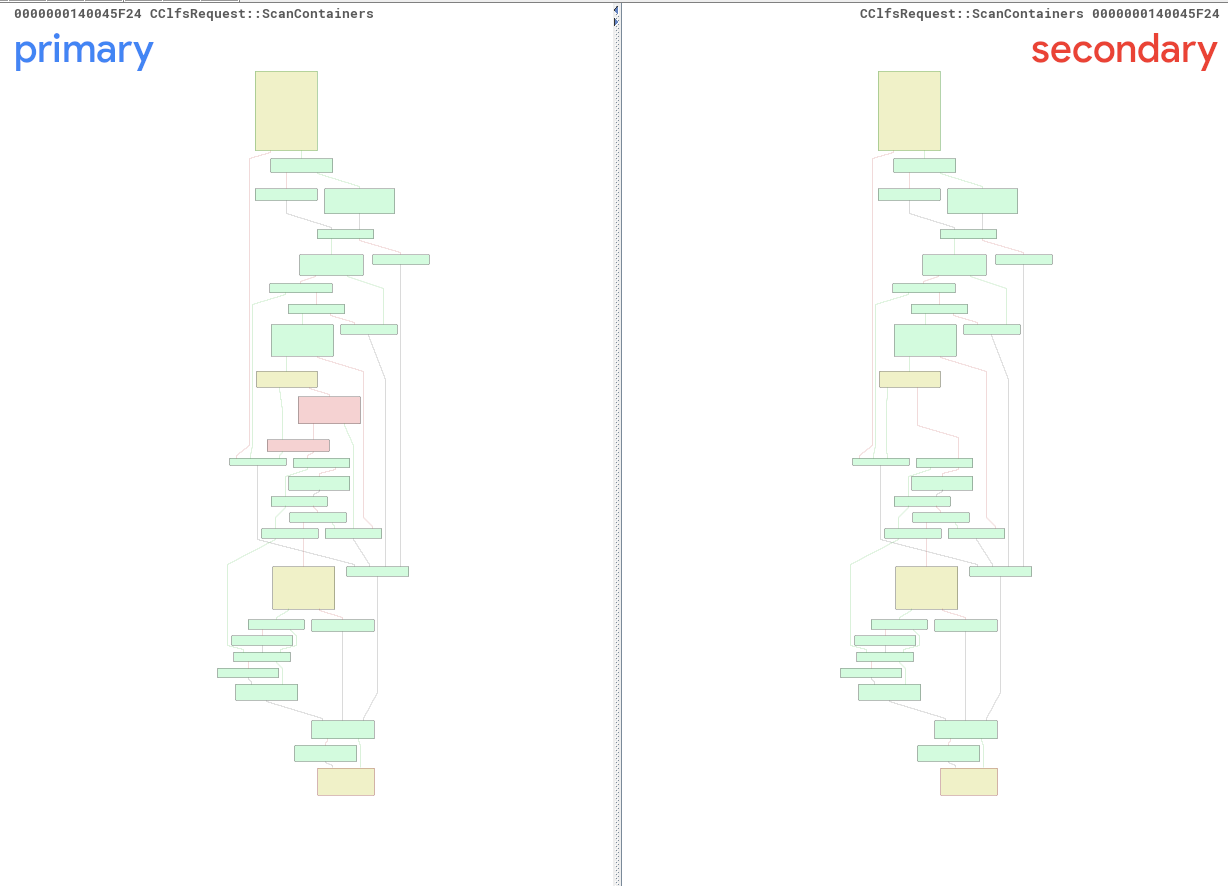

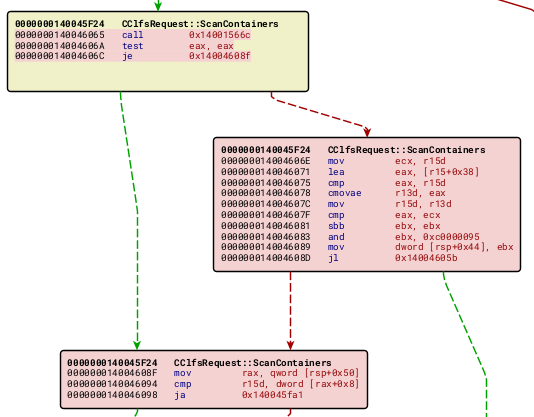

So in the CClfsRequest::ScanContainers function, we have the addition of two blocks. If we take a quick look at the assembly in those blocks, just within BinDiff, we can get an early sense of what bug we’ll be looking for when we move over to the decompilation:

Upon entering this new block, r15d will be holding a value taken from the input buffer (we’ll explore what exactly, soon), or it will hold 0xffffffff if that user value exceeds 0xffffffff. What we then see is essentially a user input value having 0x38 added to it, and this value being bounds checked. There is also a check to determine whether or not the value has wrapped around, which results in a 0xc0000095 (STATUS_INTEGER_OVERFLOW) error, indicating an integer overflow.

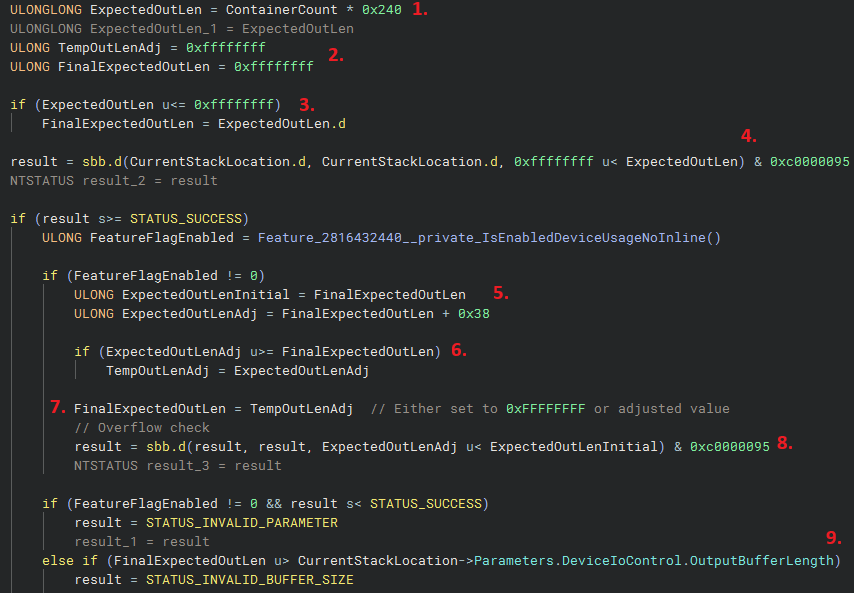

It’s probably easier to move over to the decompilation to dig into this further, and when we do, it’s pretty obvious where this additional block is, both from the check of the value adjusted by 0x38 and from the feature flag check function call, which is commonly used in staged rollouts of patches.

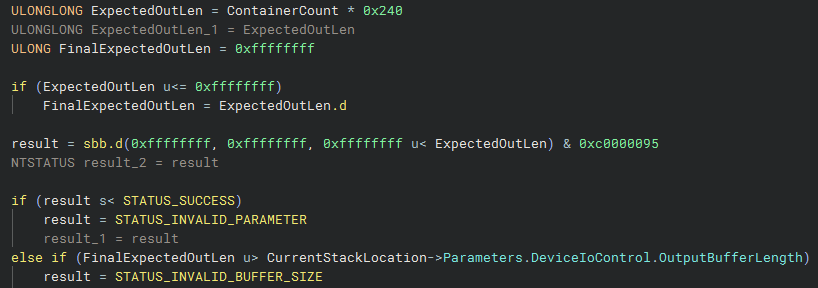

We still haven’t gone over the context surrounding where we are in the driver and what objects are being handled, but I’ll quickly go over the patch because it’s really just a bounds-checking issue.

At 1. it’s important to know that ContainerCount is the user-supplied value that I alluded to when looking at the assembly. This count is taken from the input buffer and, as is probably obvious at this point, specifies how many objects the caller is querying information about. The count is multiplied by 0x240, which is the size of the container objects being returned. These objects are of type _CLS_CONTAINER_INFORMATION. So, in effect, this expression is intended to produce the expected length of the data being retrieved and written to the output. It’s worth noting that this value is an 8-byte integer.

At 2. there are two variables, at least in the way Binja is displaying the decompilation, with the important point being they are initialised to 0xFFFFFFFF, the maximum size available in a 4-byte unsigned integer.

At 3. and 4. the calculated expected output length is checked against this upper bound of 0xFFFFFFFF, and if it’s of a lower value, then the calculated value is fine to proceed with. If it’s greater (or actually equal to), the check at 4. results in the INTEGER_OVERFLOW error being returned.

At 5. the calculated value is retained in a new variable, and a second new variable is initialised that holds the value with the addition of 0x38. This adjusted value is compared to the initial value at 6., and this only really makes sense to me as an additional overflow check. Interestingly though, I don’t actually think this value could overflow, as 0xFFFFFFFF when divided by the 0x240 size doesn’t land close enough to the upper boundary to be overflowed by the + 0x38. Regardless, it is expected that the adjusted length will be greater than the initial value, and if that is the case then at 7. our “final” variable is filled with the adjusted value. 8. shows that if there is an overflow, the INTEGER_OVERFLOW status is returned.
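The claim that the + 0x38 can’t wrap is easy to sanity check with a bit of arithmetic. The following is a minimal sketch, not the driver’s code: the function names are hypothetical, and it assumes (from the walkthrough above) that the product must be strictly below 0xFFFFFFFF to get past the checks at 3. and 4.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

#define CONTAINER_INFO_SIZE 0x240u /* size of one CLS_CONTAINER_INFORMATION, per the post */
#define SCAN_CONTEXT_SIZE   0x38u  /* size of CLS_SCAN_CONTEXT, per the post */

/* Largest ContainerCount whose product with 0x240 stays strictly below
 * 0xFFFFFFFF, i.e. the largest count assumed to survive checks 3. and 4. */
uint64_t max_accepted_count(void)
{
    return (UINT32_MAX - 1u) / CONTAINER_INFO_SIZE;
}

/* Largest adjusted length (product + 0x38) that can reach the check at 6. */
uint64_t max_adjusted_length(void)
{
    return max_accepted_count() * CONTAINER_INFO_SIZE + SCAN_CONTEXT_SIZE;
}

/* Hypothetical helper that prints the worst case. */
void print_worst_case(void)
{
    uint64_t adjusted = max_adjusted_length();

    /* Even the worst case still fits in 32 bits, so nothing wraps. */
    assert(adjusted <= UINT32_MAX);
    printf("max count: 0x%llx, max adjusted length: 0x%llx\n",
           (unsigned long long)max_accepted_count(),
           (unsigned long long)adjusted);
}
```

Under these assumptions the largest accepted count is 0x71C71C, which gives a product of 0xFFFFFF00 and an adjusted length of 0xFFFFFF38, comfortably below the 32-bit limit.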

And finally, at 9., there is a key check: whether this adjusted value is greater than the OutputBufferLength passed in the I/O call to the driver.

Here is a comparison with the same function, pre-patch:

So yeah - there are no checks made against the ExpectedOutLen with the addition of the 0x38 bytes. So we can reasonably assume that we likely have an overflow of some sort, by up to 0x38 bytes past the end of a buffer.

So what is the 0x38 bytes?

Well, it’s the context object for the I/O call - specifically, CLS_SCAN_CONTEXT. It is read from the “output” buffer, as essentially the input to the call, and then that same buffer is used to copy the output into. This might seem confusing given the separation of input/output buffers in DeviceIoControl calls, but it is related to the I/O method used for this specific call. The CClfsRequest::ScanContainers function is reached via the IOCTL 0x80076816. Once decoded, this code specifies the buffer method as METHOD_OUT_DIRECT. What this means is that:

- Input buffer = Buffered I/O

- Output buffer = Direct I/O
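For anyone who wants to decode the IOCTL themselves, here is a small sketch that splits a control code into the four fields packed by the WDK’s CTL_CODE macro. The struct and function names are my own (hypothetical); only the bit layout and the method values come from the Windows headers.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* Bit layout of an I/O control code, as packed by CTL_CODE:
 * DeviceType (31..16) | Access (15..14) | Function (13..2) | Method (1..0) */
typedef struct {
    uint32_t device_type;
    uint32_t access;
    uint32_t function;
    uint32_t method;
} ioctl_fields; /* hypothetical name, for illustration only */

ioctl_fields decode_ioctl(uint32_t code)
{
    ioctl_fields f;
    f.device_type = (code >> 16) & 0xFFFFu;
    f.access      = (code >> 14) & 0x3u;
    f.function    = (code >> 2)  & 0xFFFu;
    f.method      = code & 0x3u;
    return f;
}

/* Maps the 2-bit method field to the constant names used in the headers. */
const char *method_name(uint32_t method)
{
    switch (method & 0x3u) {
    case 0:  return "METHOD_BUFFERED";
    case 1:  return "METHOD_IN_DIRECT";
    case 2:  return "METHOD_OUT_DIRECT";
    default: return "METHOD_NEITHER";
    }
}

/* Hypothetical helper that prints the decoded fields. */
void print_ioctl(uint32_t code)
{
    ioctl_fields f = decode_ioctl(code);

    assert(f.method <= 3u);
    printf("device=0x%x access=%u function=0x%x method=%s\n",
           f.device_type, f.access, f.function, method_name(f.method));
}
```

Calling print_ioctl(0x80076816) gives a device type of 0x8007, function 0xA05, and a method field of 2, which is METHOD_OUT_DIRECT.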

When Direct I/O is selected as the buffer management method, the I/O Manager brings the user-supplied buffer into physical memory and locks it so it can’t be paged out. The I/O Manager then prepares an MDL and assigns a pointer to it to Irp->MdlAddress. I’m not sure if this is actually documented by Microsoft anywhere, but Pavel at least points out in his kernel programming course that the Direct I/O output buffer really can be (and clearly is) used as both the input and the output buffer in certain cases.

The CLS_SCAN_CONTEXT object has the following definition:

    typedef struct _CLS_SCAN_CONTEXT {
        CLFS_NODE_ID cidNode;                      // 0x0
        PLOG_FILE_OBJECT plfoLog;                  // 0x8
        ULONG cIndex;                              // 0x10
        ULONG cContainers;                         // 0x18
        ULONG cContainersReturned;                 // 0x20
        CLFS_SCAN_MODE eScanMode;                  // 0x28
        PCLS_CONTAINER_INFORMATION pinfoContainer; // 0x30
    } CLS_SCAN_CONTEXT, *PCLS_SCAN_CONTEXT, PPCLS_SCAN_CONTEXT;

So the root cause of the vulnerability is that, when calculating whether the requested number of container objects fits within the size of the output buffer, the function does not take into consideration that 0x38 bytes of that output buffer are already taken up by the preceding context object.
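To make the difference concrete, here is a rough model of the two checks. This is a sketch under assumptions rather than the driver’s actual logic: the function names are hypothetical, the exact comparison operators are guessed from the decompilation walkthrough, and it assumes the container array is written immediately after the 0x38-byte context at the start of the output buffer.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define CONTAINER_INFO_SIZE 0x240u /* size of one CLS_CONTAINER_INFORMATION */
#define SCAN_CONTEXT_SIZE   0x38u  /* size of CLS_SCAN_CONTEXT */

/* Assumed pre-patch behaviour: only the container array is compared
 * against the output buffer length. */
bool fits_prepatch(uint32_t count, uint32_t out_len)
{
    uint64_t expected = (uint64_t)count * CONTAINER_INFO_SIZE;

    return expected < UINT32_MAX && expected <= out_len;
}

/* Assumed post-patch behaviour: the context that precedes the array
 * is included in the length that must fit. */
bool fits_postpatch(uint32_t count, uint32_t out_len)
{
    uint64_t expected = (uint64_t)count * CONTAINER_INFO_SIZE;

    if (expected >= UINT32_MAX)
        return false;
    return expected + SCAN_CONTEXT_SIZE <= out_len;
}

/* Bytes that would land past the end of the buffer if the request were
 * serviced with the context at offset 0 and the array right after it. */
uint32_t overrun_bytes(uint32_t count, uint32_t out_len)
{
    uint64_t end = SCAN_CONTEXT_SIZE + (uint64_t)count * CONTAINER_INFO_SIZE;

    return end > out_len ? (uint32_t)(end - out_len) : 0u;
}
```

With a count of 1 and a 0x240-byte output buffer, the pre-patch model accepts the request while the post-patch model rejects it, and overrun_bytes reports the 0x38 bytes discussed above.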

This means that the last 0x38 bytes of a CLS_CONTAINER_INFORMATION object can be written outside the bounds of the output buffer. If we look at the CLS_CONTAINER_INFORMATION definition:

    typedef struct _CLS_CONTAINER_INFORMATION {
        ULONG FileAttributes;                    // 0x0
        ULONGLONG CreationTime;                  // 0x8
        ULONGLONG LastAccessTime;                // 0x10
        ULONGLONG LastWriteTime;                 // 0x18
        LONGLONG ContainerSize;                  // 0x20
        ULONG FileNameActualLength;              // 0x28
        ULONG FileNameLength;                    // 0x2C
        WCHAR FileName[CLFS_MAX_CONTAINER_INFO]; // 0x30
        CLFS_CONTAINER_STATE State;              // 0x230
        CLFS_CONTAINER_ID PhysicalContainerId;   // 0x234
        CLFS_CONTAINER_ID LogicalContainerId;    // 0x238
    } CLS_CONTAINER_INFORMATION, *PCLS_CONTAINER_INFORMATION;

This ends up being the final 0x28 bytes of FileName, followed by State, PhysicalContainerId, and LogicalContainerId.
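The split of those 0x38 bytes can be checked with offsetof on a stand-in struct. This is only a sketch: the mirror type and function names are hypothetical, the Windows types are swapped for fixed-width equivalents (WCHAR as uint16_t, the state and ID fields as uint32_t), CLFS_MAX_CONTAINER_INFO is taken to be 256 as implied by the offsets above, and it assumes a 64-bit target where uint64_t is 8-byte aligned.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

#define CLFS_MAX_CONTAINER_INFO 256 /* WCHAR count implied by offsets 0x30..0x230 */
#define SCAN_CONTEXT_SIZE 0x38u     /* size of CLS_SCAN_CONTEXT, per the post */

/* Stand-in for CLS_CONTAINER_INFORMATION using fixed-width types. */
typedef struct {
    uint32_t FileAttributes;
    uint64_t CreationTime;
    uint64_t LastAccessTime;
    uint64_t LastWriteTime;
    int64_t  ContainerSize;
    uint32_t FileNameActualLength;
    uint32_t FileNameLength;
    uint16_t FileName[CLFS_MAX_CONTAINER_INFO];
    uint32_t State;
    uint32_t PhysicalContainerId;
    uint32_t LogicalContainerId;
} container_info_mirror;

/* Offset within the last container at which the out-of-bounds part begins. */
size_t spill_start(void)
{
    return sizeof(container_info_mirror) - SCAN_CONTEXT_SIZE;
}

/* How many bytes of FileName fall into the out-of-bounds region. */
size_t filename_bytes_spilled(void)
{
    return offsetof(container_info_mirror, State) - spill_start();
}

/* Bytes covered by State, the two container IDs, and trailing padding. */
size_t trailing_bytes_spilled(void)
{
    return sizeof(container_info_mirror) - offsetof(container_info_mirror, State);
}

/* Hypothetical helper that prints the breakdown. */
void print_spill(void)
{
    assert(filename_bytes_spilled() + trailing_bytes_spilled() == SCAN_CONTEXT_SIZE);
    printf("spill starts at 0x%zx: 0x%zx bytes of FileName, 0x%zx bytes of trailing fields\n",
           spill_start(), filename_bytes_spilled(), trailing_bytes_spilled());
}
```

On a typical x86-64 build the spill starts at offset 0x208, covering 0x28 bytes of FileName and 0x10 bytes for the three trailing ULONG-sized fields plus padding.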

These fields are written a couple of function calls later, in CClfsBaseFile::ScanContainerInfo.

Exploit Feasibility

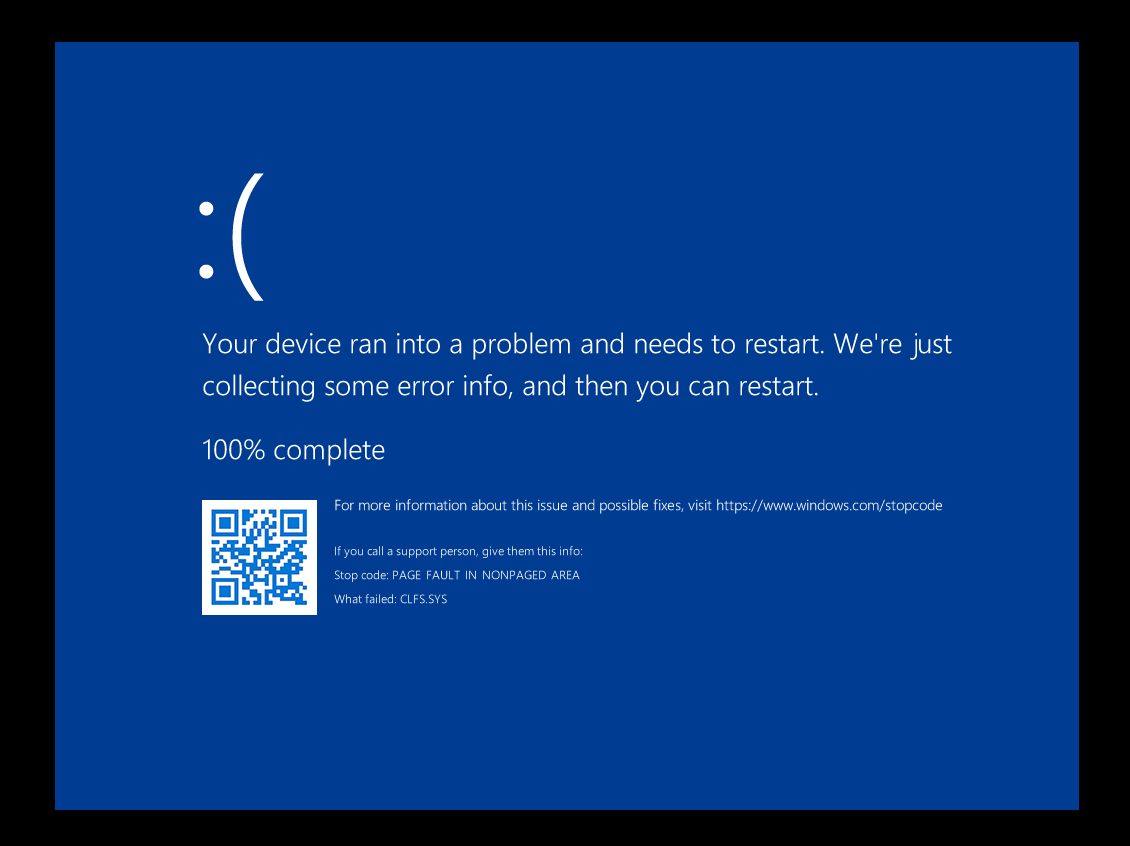

We can quite easily get this to cause a BSOD as an initial PoC, confirming that the vulnerability exists. The most straightforward way is to position our user-land buffer at the end of a page of memory, with a guard page adjacent to it. When the driver attempts to write beyond the end of the legitimate buffer and into the guard page, it will produce a page fault that can’t be handled by the kernel, resulting in a BSOD.

This works as expected:

At this point, however, I got a bit confused by the MSRC advisory. The advisory categorises the CVE as a heap-based buffer overflow - but what we are seeing here isn’t really that, it’s an overflow of the System PTE pages, into a user-land buffer. Looking around online, and sending an LLM off to do the same thing, didn’t turn up any examples of exploits overflowing the virtual memory that is mapped for these MDLs.

It’s possible that I’ve misinterpreted the bug ¯\_(ツ)_/¯, or that I’m overlooking some controllable aspect of the I/O request, that changes where this overflow takes place. Alternatively, the MSRC categorisation might be inaccurate, or there’s some technique unknown to me for controlling/influencing where this overflow occurs. Please reach out to me and let me know if this is the case.